Top 3 Kafka Streams Challenges

We’ve been writing a lot about challenges lately. We recently wrote about API challenges; now we’re discussing challenges with Kafka Streams. Volt is very big on Kafka: we specialize in complementing it so that enterprises can get the most out of it. That said, a lot of companies [...]

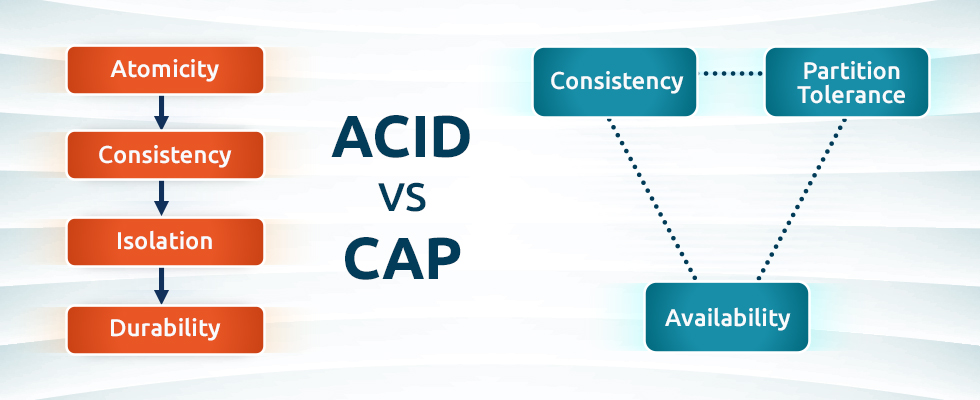

ACID vs CAP: What’s the Difference?

ACID transactions (and the ACID properties behind them) and the CAP theorem are two important concepts in data management and distributed systems. Unfortunately, the “C” in both acronyms stands for “Consistency,” yet it means something completely different in each. What follows is a primer on the two concepts and an explanation of the differences between the two “C”s. What […]

The 5 Biggest Challenges With APIs

Over the last couple of years, we’ve heard a lot of breathless talk about application programming interfaces (APIs) and how they’re going to change everything. APIs exist to solve a fundamental problem: how can a group of people use stuff written by a different group of people, who may have had different ideas and expectations? [...]

How Caches Become Databases – And Why You Don’t Want This

Companies like to complicate things. There’s no better way to say it. The law of entropy clearly applies as much to tech stacks and data management as it does to closets and desk drawers. In the world of databases, data management, and data platforms, this entropy usually takes the form of a simple database or [...]

The 6 Rules for Achieving (and Maintaining) High Availability

In the age of big-data-turned-massive-data, maintaining high availability, aka ultra-reliability, aka “uptime,” has become “paramount,” to use a ChatGPT word. Why? Because until recently, most computer interaction was human-to-computer, and humans are (relatively) flexible when systems misbehave: they can try a few more times and then go off and do something else before trying again [...]

Latency vs. Throughput: Navigating the Digital Highway

Imagine the digital world as a bustling highway, where data packets are vehicles racing to their destinations. In this fast-paced ecosystem, two vital elements determine the efficiency of this traffic: latency and throughput. Let’s unravel the mystery behind these terms and examine their significance in our everyday online experiences.

Latency: The Waiting Game

Latency is [...]